DeepSeek has taken the AI world by storm, sparking debate over whether we’re on the brink of a technological revolution.

Love it or not, this new Chinese AI model stands apart from anything we’ve seen before. In this post, we’ll break down what makes DeepSeek different from other AI models and how it’s changing the game in software development.

Reasoning model vs. standard language model

If you’ve had a chance to try DeepSeek Chat, you might have noticed that it doesn’t just spit out an answer right away. Instead, it walks through the thinking process step by step. This approach is known as the “chain of thought.”

That’s because a reasoning model doesn’t just generate responses based on patterns it learned from massive amounts of text. Instead, it breaks down complex tasks into logical steps, applies rules, and verifies conclusions.

It’s the same way you’d tackle a tough math problem—breaking it into parts, solving each step, and arriving at the final answer.
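If you run a reasoning model yourself, you can usually separate that thinking from the final answer. The sketch below assumes the raw output wraps its reasoning in `<think>...</think>` tags, which is the format commonly seen when running the open R1 weights locally; hosted APIs may expose the reasoning differently. The `split_reasoning` helper and the sample string are illustrative, not part of any DeepSeek library.

```python
import re

# Assumption: the raw completion wraps its reasoning in <think>...</think>.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a raw model completion."""
    match = THINK_BLOCK.search(raw)
    if match is None:
        # No reasoning block found: treat the whole text as the answer.
        return "", raw.strip()
    reasoning = match.group(1).strip()
    answer = (raw[:match.start()] + raw[match.end():]).strip()
    return reasoning, answer


# Hand-written sample, not real model output.
sample = "<think>17 * 3 = 51, then 51 + 9 = 60.</think>The result is 60."
reasoning, answer = split_reasoning(sample)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

Keeping the two parts separate lets you log the reasoning for auditing while showing users only the answer.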

Why Are Reasoning Models a Game-Changer?

Reasoning models deliver more accurate, reliable, and—most importantly—explainable answers than standard AI models. Instead of just matching patterns and relying on probability, they mimic human step-by-step thinking.

Until recently, this was a major challenge for AI. But now, reasoning models are changing the game.

Here are the key benefits that make them a breakthrough in AI:

They generalize better

Generalization means an AI model can solve new, unseen problems instead of just recalling similar patterns from its training data.

Before reasoning models, AI could solve a math problem if it had seen many similar ones before. But if you rephrased the question, the model might struggle because it relied on pattern matching rather than actual problem-solving.

A reasoning model, on the other hand, analyzes the problem, identifies the right rules, applies them, and reaches the correct answer—no matter how the question is worded or whether it has seen a similar one before.

Their answers are more reliable

Unlike standard AI models, which jump straight to an answer without showing their thought process, reasoning models break problems into clear, step-by-step solutions. They can even backtrack, verify, and correct themselves if needed, reducing the chances of hallucinations.

Plus, because reasoning models track and document their steps, they’re far less likely to contradict themselves in long conversations—something standard AI models often struggle with.

They are better for complex decision-making

Reasoning models excel at handling multiple variables at once. By keeping track of all factors, they can prioritize, compare trade-offs, and adjust their decisions as new information comes in.

Standard AI models, on the other hand, tend to focus on a single factor at a time, often missing the bigger picture. They also struggle with assessing likelihoods, risks, or probabilities, making them less reliable.

DeepSeek vs. other AI models: When is it the right choice?

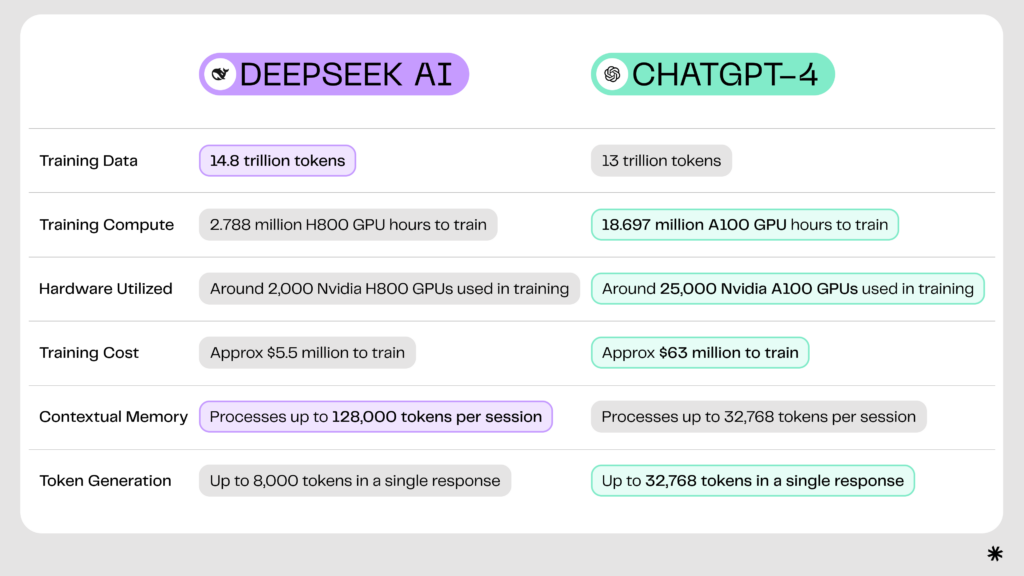

DeepSeek isn’t the only reasoning AI out there—it’s not even the first. It competes with models from OpenAI, Google, Anthropic, and several smaller companies.

In a previous post, we covered different AI model types and their applications in AI-powered app development. Now, let’s compare specific models based on their capabilities to help you choose the right one for your software.

- Accessing web data in real time

Not all AI models can search the web or learn new information beyond their training data. This is crucial for fact-checking and staying up-to-date.

- Models that can search the web: DeepSeek, Gemini, Grok, Copilot, ChatGPT.

- Models that cannot: Claude.
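When a model can’t search the web, a common workaround is to fetch relevant text yourself and place it in the prompt, the idea behind retrieval-augmented generation. Here is a deliberately naive sketch: it ranks documents by word overlap with the question, whereas real systems typically use embeddings or a search index. The function names and the sample documents are made up for illustration.

```python
def score(query: str, doc: str) -> int:
    """Count how many distinct query words also appear in the document."""
    doc_words = set(doc.lower().split())
    return sum(1 for word in set(query.lower().split()) if word in doc_words)


def build_grounded_prompt(query: str, docs: list[str], top_k: int = 2) -> str:
    """Put the top_k most relevant documents in front of the question."""
    ranked = sorted(docs, key=lambda d: score(query, d), reverse=True)[:top_k]
    context = "\n".join(f"- {d}" for d in ranked)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical knowledge snippets.
docs = [
    "The release notes for version 2.4 mention a new billing API.",
    "Office plants need watering weekly.",
    "Version 2.4 ships on Friday.",
]
prompt = build_grounded_prompt("what is new in version 2.4", docs)
print(prompt)
```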

- Solving non-math problems

For tasks like document review and pattern analysis, DeepSeek and OpenAI’s GPT-4o perform equally well. However, Gemini and Claude may require extra supervision: it’s best to ask them to verify and self-correct their responses before fully trusting the output.

- Solving math problems

In our testing, we used a simple math problem that required multimodal reasoning. Both DeepSeek R1 and OpenAI’s GPT-4o solved it correctly. Based on online feedback, most users had similar results.

Gemini 2.0 Flash and Claude 3.5 Sonnet handle purely mathematical problems well but may struggle when a solution requires creative reasoning.

- Generating code

Coding is among the most popular LLM use cases. Before DeepSeek, Claude was widely regarded as the best model for coding, with a reputation for producing clean, reliable code.

Here’s how the top models compare:

- Claude: Strongest at writing clean, error-free code;

- Gemini: Excels at explaining code rather than writing it;

- OpenAI’s GPT-4o: Faster than most models when generating code;

- DeepSeek: Built specifically for coding, offering high-quality and precise code generation—but it’s slower compared to other models.

You can find performance benchmarks for all major AI models here.

- Writing stories

All LLMs can generate text based on prompts, and judging the quality is mostly a matter of personal preference. However, AI models tend to fall into repetitive phrases and structures that show up again and again.

We tested a small prompt and also reviewed what users have shared online. Here’s what we found:

- OpenAI models produce natural-sounding text with a balanced level of detail;

- Gemini tends to overuse literary and flowery language;

- DeepSeek Chat has a distinct writing style with unique patterns that don’t overlap much with other models.

Limitations of DeepSeek (and, honestly, any other AI model)

Compute vs. efficiency trade-off

Unlike simple classification or pattern-matching AI, reasoning models go through multi-step computations, which dramatically increase resource demands. The more accurate and in-depth the reasoning, the more computing power it requires.

This means there’s always a trade-off: optimizing for reasoning accuracy often comes at the cost of resource utilization and speed.

| Improving accuracy | Using less compute power |
|---|---|
| Large, deep networks capture more abstract reasoning | The smaller the model, the less memory, power, and GPU hours it uses to process each query |
| Allowing AI to break problems into logical sub-tasks improves accuracy | Fewer reasoning steps reduce inference time, simplifying real-time reasoning |
| Retrieval-augmented generation fetches real-time knowledge, improving accuracy | Generating responses solely from pre-trained internal knowledge saves compute |
| Adding rule-based layers improves logical consistency | Pure end-to-end deep learning reduces the amount of processing per reasoning step |
| Cross-checking multiple reasoning paths reduces hallucinations | Following only one reasoning trajectory without verifying alternative solutions saves processing power |
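To make the last row concrete, here is a minimal sketch of cross-checking several reasoning paths by majority vote. It assumes you have already sampled the same question several times and extracted each final answer as a string; the sample list is hand-written. Note the cost side of the trade-off: five samples mean roughly five times the compute of a single answer.

```python
from collections import Counter


def majority_answer(answers: list[str]) -> tuple[str, float]:
    """Pick the most common final answer and report its share of the votes."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    normalized = [a.strip().lower() for a in answers]
    winner, votes = Counter(normalized).most_common(1)[0]
    return winner, votes / len(normalized)


# Hypothetical final answers from five independent samples.
sampled = ["42", "42", "41", " 42 ", "40"]
answer, agreement = majority_answer(sampled)
print(f"answer={answer}, agreement={agreement:.0%}")
```

A low agreement score is a useful signal on its own: it can flag questions that deserve a human look.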

Balancing correctness and hallucinations

A general-purpose AI must handle a wide range of tasks—from solving math problems to writing creative text. Reasoning AI improves logical problem-solving, making hallucinations less frequent than in older models. However, this also increases the need for proper constraints and validation mechanisms.

Here’s the challenge:

- More structured logic = higher accuracy, but if the AI isn’t well-constrained, it might invent reasoning steps that don’t actually make sense.

- More reliance on verified facts = fewer hallucinations, but this could make AI reject unconventional yet valid solutions, limiting its usefulness for creative work.

Striking the right balance is key to making AI both accurate and adaptable.

| Better AI correctness | Higher chance of hallucinations |
|---|---|
| Stronger reliance on structured logic reduces errors in well-defined domains | If not well-constrained, models invent reasoning steps even when they don’t make sense |
| Models break down problems into sequential steps, improving accuracy | Errors in one reasoning step propagate, causing a chain reaction of hallucinations |
| Models designed for causal inference improve decision-making | If the model overfits to correlations, it may generate incorrect causal links |
| If models cross-check their logic, they reduce errors | If they lack self-verification mechanisms, they confidently present flawed logic |
| If trained on high-quality proofs and logical structures, they perform better | Incomplete data can cause them to guess missing reasoning steps incorrectly |
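One simple validation mechanism is to check each reasoning step independently, so a single wrong step is caught before it propagates. The sketch below handles only a toy case: steps written as `a op b = c` with integer operands. The step format and the `first_bad_step` helper are assumptions for illustration; real reasoning traces are free text and need far more robust checking.

```python
import operator
import re
from typing import Optional

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}
STEP = re.compile(r"(-?\d+)\s*([+\-*])\s*(-?\d+)\s*=\s*(-?\d+)")


def first_bad_step(steps: list[str]) -> Optional[int]:
    """Return the index of the first step whose arithmetic is wrong, or None."""
    for i, step in enumerate(steps):
        m = STEP.fullmatch(step.strip())
        if m is None:
            return i  # unparseable steps are treated as failures
        a, op, b, claimed = int(m[1]), m[2], int(m[3]), int(m[4])
        if OPS[op](a, b) != claimed:
            return i
    return None


# Hand-written trace: the second step is wrong, and the third builds on it.
trace = ["12 * 3 = 36", "36 + 5 = 42", "42 - 2 = 40"]
print(first_bad_step(trace))
```

The third step is arithmetically fine on its own, which is exactly why the error upstream is easy to miss without a per-step check.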

Compromise between data quality & bias

The general rule is simple: better training data = better AI accuracy. However, reducing bias often means limiting data diversity, which can hurt the model’s ability to provide high-quality answers across a wide range of topics.

For example, a medical AI trained primarily on Western clinical trials may struggle to accurately diagnose patients from underrepresented populations.

| Improving data quality | Increasing bias |
|---|---|
| Exposing the model to multiple viewpoints increases reasoning accuracy | Some sources may be unreliable or biased, reinforcing incorrect reasoning patterns |
| Removing harmful stereotypes and incorrect generalizations improves quality | Over-filtering can remove valid but controversial information, reducing reasoning flexibility |
| Helping models specialize in legal, medical, or scientific reasoning improves quality | Narrow training data may introduce biases from a specific discipline or region |
| Ensuring different demographic and ideological perspectives are represented improves quality | Overcompensation can distort reasoning by forcing artificial neutrality where none exists |
| Improving factual correctness by using verified knowledge bases improves quality | Curated knowledge bases (e.g., encyclopedias, academic papers) may reflect historical or systemic biases |

Give and take between interpretability and performance

Reasoning models don’t just match patterns—they follow complex, multi-step logic. But the more sophisticated a model gets, the harder it becomes to explain how it arrived at a conclusion.

On the flip side, prioritizing interpretability often means relying too much on explicit logical rules, which can limit performance and make it harder for the AI to handle new, complex problems.

The challenge is finding the right balance—making AI transparent enough to trust without sacrificing its problem-solving power.

| Improving model interpretability | Reducing performance |
|---|---|
| Ensuring transparent, structured reasoning with clear rules improves interpretability | Overreliance on rules limits the model’s adaptability; it starts struggling with ambiguous, real-world problems |
| Using neural networks (deep learning) improves model accuracy and generalization | Deep learning causes the model to become a “black box” with no clear way to explain why a decision was made |
| Hybrid AI combines structured logic with deep learning, making some steps interpretable | Hybrid AI requires more compute power and adds complexity to training |
| Focusing on step-by-step traceability allows humans to audit AI decisions and detect errors | It slows down inference time, reducing efficiency |
| End-to-end deep learning improves model speed and accuracy | It makes incorrect conclusions harder to audit and diagnose |

How can DeepSeek help you make your own app?

One of DeepSeek’s biggest advantages is that it’s open-source—meaning anyone can take the original code, modify it, and adapt it to their specific needs. This creates a cycle where each improvement builds on the last, leading to constant innovation.

Over time, this leads to a vast collection of pre-built solutions, allowing developers to launch new projects faster without having to start from scratch.

And of course, you can deploy DeepSeek on your own infrastructure, which isn’t just about using AI—it’s about regaining control over your tools and data. Running DeepSeek on your own system or cloud means you don’t have to depend on external services, giving you greater privacy, security, and flexibility.

You can use DeepSeek’s models in software development in three main ways:

- Fine-tune DeepSeek to fit your specific use case;

- Keep data secure by hosting the model in-house;

- Leverage open-source tools to speed up your build process.
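As a rough illustration of the in-house option, here is how an application might talk to a self-hosted model. The sketch assumes your inference server exposes an OpenAI-style `/v1/chat/completions` endpoint, which several open-source serving tools offer; the address `http://localhost:8000` and the model name `deepseek-r1` are placeholders to replace with your own deployment’s values.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for an OpenAI-style chat completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(request: urllib.request.Request) -> str:
    """Send the request and return the first reply (needs a running server)."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Hypothetical local address and model name; nothing is sent until ask() is called.
request = build_chat_request("http://localhost:8000", "deepseek-r1", "Summarize this ticket.")
print(request.full_url)
```

Because the request never leaves your network, prompts and replies stay on infrastructure you control.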

Want to get started? Here’s how to use DeepSeek R1 to build software:

Step 1: Define your software requirements

The first step in building any software is documenting what it should do—including its features, constraints, and user expectations.

DeepSeek Chat can help by analyzing your goals and translating them into technical specifications, which you can turn into actionable tasks for your development team.

Here’s a brief list of what you should do:

- Identify key features and functionalities from your requirements;

- Break them into smaller, manageable tasks (e.g., API development, UI design, database setup);

- Prioritize tasks based on dependencies and project roadmap;

- Define acceptance criteria to ensure clear expectations;

- Use JIRA, Trello, Asana, ClickUp, or another tool to organize tasks;

- Assign tasks to the right team members (backend, frontend, DevOps, QA);

- Set milestones and deadlines to keep the project on track;

- Hold a kickoff meeting to align on goals;

- Ensure everyone understands the requirements and expectations;

- Allow developers to provide feedback—they might suggest better solutions.
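Prioritizing by dependencies is one part of this list that is easy to automate. The sketch below uses Python’s standard `graphlib` module to order tasks so that each one comes after the tasks it depends on. The task names and dependencies are hypothetical; in practice they would come from your own backlog.

```python
from graphlib import TopologicalSorter

# Hypothetical backlog: each task maps to the set of tasks it depends on.
tasks = {
    "database setup": set(),
    "API development": {"database setup"},
    "UI design": set(),
    "frontend integration": {"API development", "UI design"},
}


def execution_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return tasks so that every task appears after its dependencies."""
    return list(TopologicalSorter(dependencies).static_order())


order = execution_order(tasks)
print(order)
```

If two tasks depend on each other, `static_order()` raises `graphlib.CycleError`, which is a handy early warning that the plan itself needs rethinking.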

At Syndicode, we call this the Discovery Phase—a crucial step at the start of every software project. It also helps uncover potential pitfalls and opportunities early on.

With over a decade of experience, we’ve built an efficient process for quickly gathering, prioritizing, and refining requirements. This ensures your vision is clear, realistic, and aligned with industry best practices.

So, if you want to refine your requirements, stay ahead of market trends, or ensure your project is set up for success, let’s talk.

Step 2: Automate code generation

DeepSeek AI speeds up and improves code generation, producing clean, well-documented code in your preferred programming language. Its specialized model, DeepSeek-Coder, allows you to analyze requirements, generate code snippets, and streamline development workflows.

Here’s how to use DeepSeek R1 for coding:

- Integrate the DeepSeek API to get real-time code suggestions as you type;

- Detect redundant code and improve algorithms and structures;

- Auto-generate API endpoints, UI components, and database models;

- Generate UI, business logic, and backend code for full-stack development.
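Whatever the model generates, it usually arrives as chat text with code wrapped in fenced blocks. A small first gate, sketched below, is to pull those blocks out and confirm they at least parse before anyone spends review time on them. The reply string is a hand-written stand-in for model output, and the helper names are illustrative.

```python
import ast
import re

FENCE = "`" * 3  # built this way so the example does not nest literal fences
CODE_BLOCK = re.compile(FENCE + r"(?:python)?\n(.*?)" + FENCE, re.DOTALL)


def extract_python_blocks(reply: str) -> list[str]:
    """Return the contents of every fenced code block in a model reply."""
    return [block.strip() for block in CODE_BLOCK.findall(reply)]


def is_valid_python(source: str) -> bool:
    """Check that the source parses; this says nothing about correctness."""
    try:
        ast.parse(source)
        return True
    except SyntaxError:
        return False


# Hand-written stand-in for a model reply.
reply = (
    "Here is the helper:\n"
    f"{FENCE}python\ndef add(a, b):\n    return a + b\n{FENCE}\n"
    "Let me know if you need tests."
)
blocks = extract_python_blocks(reply)
print([is_valid_python(block) for block in blocks])
```

A syntax check only filters out obviously broken output; logic, security, and fit with your architecture still need human review.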

Once the AI generates code, it needs to be integrated into a larger software architecture and tested to ensure everything works together. While this may seem straightforward, it requires technical expertise to:

- Review and refine AI-generated code to meet industry standards;

- Ensure the software is scalable and future-proof;

- Handle complex integrations and customizations that go beyond AI’s capabilities.

If your team lacks experience in these areas, Syndicode’s AI development experts can help fine-tune the code and optimize your project.

Step 3: Ensure collaboration and version control

DeepSeek AI can streamline code reviews, merge conflict resolution, change tracking, and DevOps integration. By automating these processes, it helps teams work more efficiently and maintain high-quality code.

Here’s how the DeepSeek API enhances collaboration:

- Pull request analysis – Checks for security issues, code quality, and performance bottlenecks;

- Bug detection – Identifies coding style violations, logic errors, and potential bugs before merging;

- Standardization – Helps maintain consistent coding practices across teams;

- Merge conflict resolution – Automatically detects and resolves conflicts;

- Workflow optimization – Suggests improvements for commits, branches, and development processes;

- Change tracking – Generates summaries and updates so all team members stay informed;

- Automated testing – Runs regression tests before merging and flags high-risk commits for manual review.
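For pull request analysis, the model needs to see what changed. One way to prepare that input, sketched below with Python’s standard `difflib`, is to turn the old and new versions of a file into a unified diff and wrap it in a review instruction. The prompt wording, file name, and code samples are assumptions for illustration; sending the prompt to a model is left out.

```python
import difflib


def build_review_prompt(filename: str, old: str, new: str) -> str:
    """Wrap a unified diff of one file in a code review instruction."""
    diff = difflib.unified_diff(
        old.splitlines(keepends=True),
        new.splitlines(keepends=True),
        fromfile=f"a/{filename}",
        tofile=f"b/{filename}",
    )
    return (
        "Review this change for security issues, logic errors, and style "
        "violations. List each finding with the line it refers to.\n\n"
        + "".join(diff)
    )


# Hypothetical change that quietly removes an authorization check.
old_code = "def is_admin(user):\n    return user.role == 'admin'\n"
new_code = "def is_admin(user):\n    return True\n"
prompt = build_review_prompt("auth.py", old_code, new_code)
print(prompt)
```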

Unfortunately, while DeepSeek Chat can automate many technical tasks, it can’t replace human oversight, team engagement, or strategic decision-making. Maintaining a well-balanced workflow still requires experienced project management.

If you need help keeping your project on track and within budget, Syndicode’s expert team is here to assist.

Step 4: Optimize testing and debugging

DeepSeek AI can assist throughout the software testing lifecycle by automating test case generation, reducing manual effort, and identifying potential bugs. It also provides explanations and suggests possible fixes.

Integrate the DeepSeek API to:

- Generate test cases automatically;

- Detect potential bugs and suggest fixes;

- Reduce manual testing efforts.
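One practical pattern is to ask the model for test cases as structured data and run them yourself. The sketch below assumes the model returns a JSON array of `{"input": ..., "expected": ...}` objects; that format, the `slugify` function under test, and the sample cases are all made up for illustration. The last case carries a wrong expectation on purpose.

```python
import json


def slugify(title: str) -> str:
    """Function under test: lowercase a title and join its words with hyphens."""
    return "-".join(title.lower().split())


def run_cases(func, cases_json: str) -> list[dict]:
    """Run JSON test cases against func and return the ones that fail."""
    failures = []
    for case in json.loads(cases_json):
        actual = func(case["input"])
        if actual != case["expected"]:
            failures.append({**case, "actual": actual})
    return failures


# Hand-written stand-in for cases a model might propose.
generated = """[
  {"input": "Hello World", "expected": "hello-world"},
  {"input": "  padded  title ", "expected": "padded-title"},
  {"input": "Already-Slugged", "expected": "already slugged"}
]"""
failures = run_cases(slugify, generated)
print(failures)
```

A failure here doesn’t automatically mean the code is wrong: the generated expectation itself may be the mistake, so each failure needs a quick human judgment.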

Be careful: while AI helps with bug detection, it can’t independently fix issues because it struggles with:

- Understanding whether an error is due to a network issue, database failure, or incorrect authentication tokens;

- Considering long-term architectural consequences, often suggesting short-term fixes that create technical debt;

- Aligning logic with business rules—it may not catch features that work technically but violate business requirements;

- Prioritizing fixes effectively—AI flags issues based on frequency, not on how critical they are to the system.
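The last point is worth a quick illustration. If a person assigns each flagged issue a severity, a few lines of code can re-rank the list so that rare but critical problems come first. The 1 to 5 severity scale and the sample issues below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    occurrences: int
    severity: int  # hypothetical scale: 1 (cosmetic) to 5 (outage or data loss)


def triage(issues: list[Issue]) -> list[Issue]:
    """Order issues by severity first and by frequency second."""
    return sorted(issues, key=lambda i: (i.severity, i.occurrences), reverse=True)


# Made-up findings: the most frequent issue is also the least important.
issues = [
    Issue("tooltip typo", occurrences=900, severity=1),
    Issue("payment double charge", occurrences=3, severity=5),
    Issue("slow search", occurrences=120, severity=3),
]
print([issue.name for issue in triage(issues)])
```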

If you need expert oversight to ensure your software is thoroughly tested across all scenarios, our QA and software testing services can help.

Step 5: Deploy and monitor your software

DeepSeek AI can assist with deployment by suggesting optimal schedules to minimize downtime, predicting computing power needs to prevent latency, and identifying failure patterns before they cause issues.
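Spotting failure patterns often starts with something much simpler than AI: a rolling error rate. The sketch below flags the moments when failures in the most recent requests pass a threshold. The window size, the threshold, and the sample data are arbitrary values chosen for the example, not recommendations.

```python
from collections import deque


def error_rate_alerts(outcomes: list[bool], window: int = 5, threshold: float = 0.4) -> list[int]:
    """Return indexes where the failure share of the last `window` requests exceeds threshold.

    `outcomes` holds True for a failed request and False for a successful one.
    """
    recent = deque(maxlen=window)
    alerts = []
    for i, failed in enumerate(outcomes):
        recent.append(failed)
        if len(recent) == window and sum(recent) / window > threshold:
            alerts.append(i)
    return alerts


# Made-up request log: a short burst of failures in the middle.
log = [False, False, True, False, True, True, False, False, False, False]
print(error_rate_alerts(log))
```

Alerts like these are the kind of signal a model can summarize or correlate, while the decision about what to do next stays with your team.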

Sadly, while AI is useful for monitoring and alerts, it can’t design system architectures or make critical deployment decisions. It struggles with:

- Structuring microservices, databases, APIs, and networking components to fit project needs;

- Determining the best course of action when issues arise—AI can alert you, but humans still need to make key decisions.

For anything beyond a proof of concept, working with a dedicated development team ensures your application is properly structured, scalable, and free from costly mistakes.

DeepSeek is a powerful tool when combined with an expert team

DeepSeek has shifted AI power away from corporations, giving users more control, privacy, and customization. However, it doesn’t solve one of AI’s biggest challenges—the need for vast resources and data for training, which remains out of reach for most businesses, let alone individuals.

So, while China’s DeepSeek AI is a powerful tool, it’s not a replacement for human expertise. By partnering with a software development company, you can combine AI’s efficiency with human creativity, experience, and strategic thinking. This ensures your software is not only built faster but also meets the highest standards of quality, scalability, and user satisfaction.

That said, DeepSeek is definitely one to watch.

Frequently asked questions

Can I use DeepSeek for my business app?

Yes, China’s DeepSeek AI can be integrated into your business app to automate tasks, generate code, analyze data, and enhance decision-making. Since it’s open-source, you can customize it to fit your specific needs. However, deploying and fine-tuning DeepSeek requires technical expertise, infrastructure, and data. If your team lacks AI experience, partnering with an AI development company can help you leverage DeepSeek effectively while ensuring scalability, security, and performance.

How long does AI-powered software take to build?

The development time for AI-powered software depends on complexity, data availability, and project scope. A simple AI-powered feature can take a few weeks, while a full-fledged AI system may take several months or more. The process includes defining requirements, training models, integrating AI, testing, and deployment. Using pre-trained models like DeepSeek can speed up development, but fine-tuning and customization still require time. Working with an experienced AI development team can help streamline the process and ensure faster, high-quality delivery.