Elon Musk’s call to pause the development of AI systems has revealed growing concern among experts and the wider public about the potential misuse of the technology. According to a recent study, three out of five people either don’t trust artificial intelligence or are unsure about it.

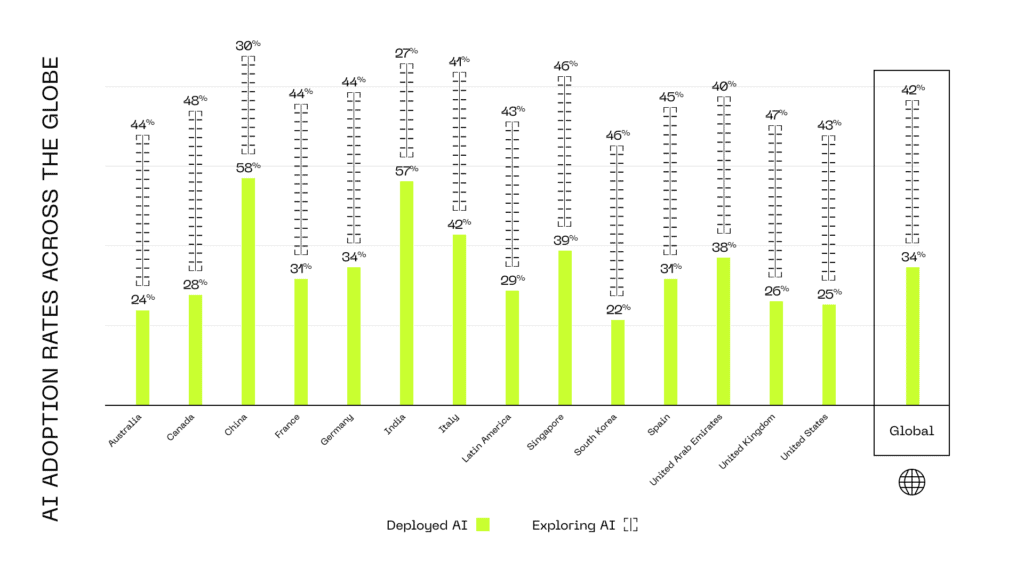

At the same time, over 35% of businesses already use AI in their operations, and over 40% are exploring the technology.

Given the recent concerns, should companies halt AI adoption? Is it possible to protect one’s business from the possible side effects of the new technology while continuing to reap the benefits?

In this post, we will delve into the risks associated with the continuous advancement of artificial intelligence, examine why companies are eager to be AI-first, and contemplate potential strategies to mitigate the risks and maximize the benefits.

The Potential Risks of Adopting AI for Your Business

Errors

According to a study by Stanford University, developers with access to an AI assistant were more likely to produce less secure code than those who wrote it manually. From a business standpoint, coding errors can lead to significant financial losses, resulting in slow software or, even worse, compromised safety.

False information

AI software provides information based on patterns and relationships it has learned from the data it has been fed. The majority of AI tools can’t verify or fact-check information, making them prone to bias and misinformation. As a result, relying on AI for information can cause massive damage to a company’s reputation.

Cybersecurity risk

Artificial intelligence can enhance the capabilities of cybercriminals and aggravate the challenge of keeping business data safe. For example, AI-based tools may be manipulated to probe applications for vulnerabilities, create deepfakes, or even mimic the writing style of authority figures, increasing the success rate of phishing attacks.

Unexpected behavior

Current generative AI models aim to consider the context and adapt to the tone in which they are asked to respond. This can lead to confusing results, especially in long sessions with topic changes, and disappoint users. A prominent example is Microsoft’s Bing AI, which has accused and gaslighted users. A brand whose chatbot behaves like this will inevitably put a strain on its customer relationships.

Key benefits of AI for business

Despite the concerns, businesses continue to employ AI in their operations. Let’s look at what makes AI so tempting that companies seem willing to disregard its potential damage.

Increased business workflow efficiency

Artificial intelligence tools can automate routine operations, freeing more time for employees to focus on creative tasks. For example, AI software is widely used for market research and for processing and categorizing data at speeds humans cannot match.

AI-based chatbots take care of repetitive customer queries, and AI assistants provide personalized information or help users with specific tasks.

Generative AI can also write content or craft computer code without human involvement. If used correctly, artificial intelligence leads to faster turnaround times and cost savings.

Improved customer experience

AI can analyze customer data and preferences to provide personalized recommendations and experiences. It can also perform sentiment analysis on customer feedback, helping businesses better understand customer preferences and needs.

Additionally, the technology can identify trends and make predictions to simplify decision-making and facilitate profitability and growth.

Enhanced data protection

We wrote about cybersecurity risks presented by AI earlier in this post, but it can also be a powerful weapon against malicious activity. By analyzing large volumes of data, AI algorithms can identify anomalies and patterns indicating suspicious activity, enabling security teams to respond quickly and prevent damage.
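To make the idea of flagging anomalies concrete, here is a minimal sketch in Python. It uses a plain z-score check as a simple statistical stand-in for the AI algorithms mentioned above, not a production detection model. The `find_anomalies` function, the threshold of 3.0, and the failed-login counts are all hypothetical and chosen only for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values, threshold=3.0):
    """Return (index, value) pairs whose z-score exceeds the threshold.

    Assumption: a simple z-score test stands in for a real anomaly
    detection model; the threshold of 3.0 is an illustrative choice.
    """
    if len(values) < 2:
        return []  # not enough data to estimate spread
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [
        (i, v) for i, v in enumerate(values)
        if abs(v - mu) / sigma > threshold
    ]


# Hypothetical hourly counts of failed login attempts
failed_logins = [4, 6, 5, 7, 5, 6, 4, 5, 6, 5,
                 7, 6, 5, 4, 6, 5, 6, 5, 4, 90]

for hour, count in find_anomalies(failed_logins):
    print(f"Hour {hour}: {count} failed logins looks suspicious")
```

In practice, a security team would likely replace the z-score with a trained model and feed the flagged events into an alerting workflow, but the principle of comparing new activity against a learned baseline is the same.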

How to leverage AI technology in your company?

The benefits of AI are substantial and may easily outweigh the potential risks. Moreover, there are ways to make the use of AI more secure and turn it into a valuable tool for driving revenue and profitability without compromising its effectiveness.

By following the tips below, you can ensure that your AI solutions are carefully deployed and managed, preventing unintended harm to your company’s reputation, workers, and clients.

1. Employ responsible AI practices

Ensure the AI system is designed with security in mind and that the company’s values are translated into its development. While there is currently no universally accepted framework to promote responsible AI, most tech leaders agree on the principles listed below.

- Comprehensiveness – it’s essential to prioritize clearly defined testing and governance criteria to prevent AI software from being hacked;

- Explicability – AI must be programmed to describe its purpose, rationale, and decision-making process in an easy-to-understand manner;

- Ethics – ethical AI processes involve procedures aimed at the search and elimination of bias in machine learning models;

- Efficiency – AI software must be able to run continuously and respond quickly to changes in the operational environment.

End-user firms may apply these principles by ensuring their AI provider practices responsible development and by providing staff with instructions on safe AI implementation and usage. Many leading organizations, including Google, Microsoft, and Meta, have introduced their own guidelines for responsible AI application development that reflect the principles above.

2. Maintain human oversight

While discussions persist about whether AI systems should be made closed-loop, the reality is that AI, if left to its own devices, may become overly reliant on algorithms. Because the technology is only as good as the data it’s trained on, it can produce inaccuracies that cause substantial harm.

Moreover, AI systems may be unable to handle novel or unexpected situations, so human intervention is necessary to prevent damage. Numerous studies have shown that the human-in-the-loop (HITL) model helps ensure safe and accurate results while contributing to the improvement of AI algorithms.

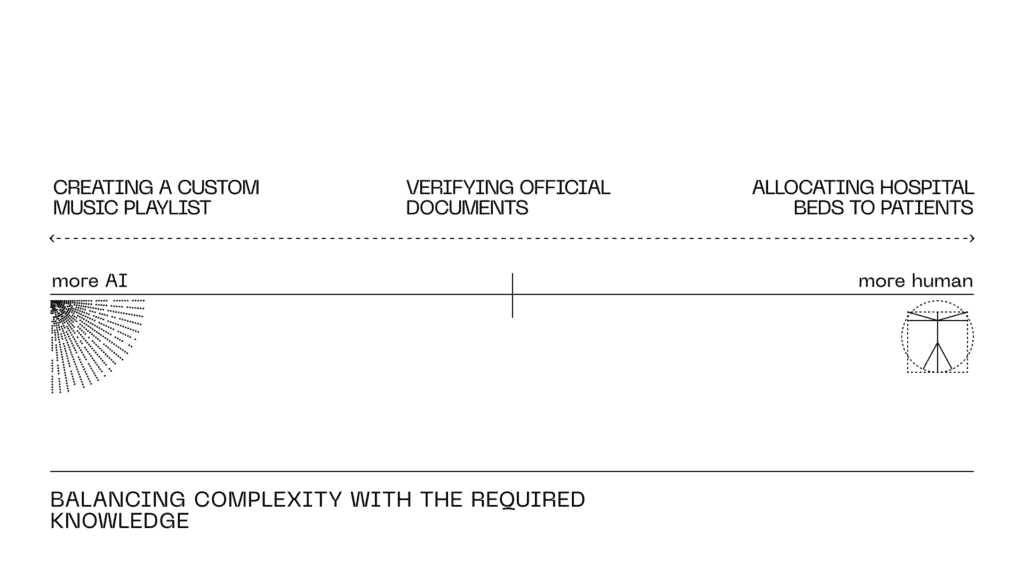

To determine how much a human should be in the loop, consider the following factors:

- The complexity of the AI solution

- The amount of damage the wrong decision can cause

- The amount of specialist knowledge required
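One way these factors could translate into practice is a routing rule that decides when a model’s output is accepted automatically and when it is escalated to a person. The sketch below is only an illustration under stated assumptions: the `Prediction` fields, the `route` function, and the 0.9 confidence cutoff are hypothetical, and confidence is used here as a rough proxy for how hard the case is for the model.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float    # model's confidence in [0, 1] (assumed available)
    high_impact: bool    # True when a wrong decision would be costly
    needs_expert: bool   # True when specialist knowledge is required


def route(prediction, min_confidence=0.9):
    """Return "human" to escalate the case or "auto" to accept the output.

    Assumption: the 0.9 cutoff is illustrative; a real threshold would be
    tuned to the solution's complexity and the cost of errors.
    """
    if prediction.high_impact or prediction.needs_expert:
        return "human"  # the stakes or the expertise call for a person
    if prediction.confidence < min_confidence:
        return "human"  # the model is unsure, so a person should check
    return "auto"


# Hypothetical cases
print(route(Prediction("approve refund", 0.97, False, False)))  # auto
print(route(Prediction("approve refund", 0.62, False, False)))  # human
print(route(Prediction("close account", 0.99, True, False)))    # human
```

Decisions a reviewer corrects can also be logged and reused as training data, which is how a human-in-the-loop setup contributes to improving the model over time.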

3. Double-check if the use case is suitable for AI

Since AI technology has limitations, its implementation in some use cases may be economically inefficient or harmful. Examples of such cases may be as follows.

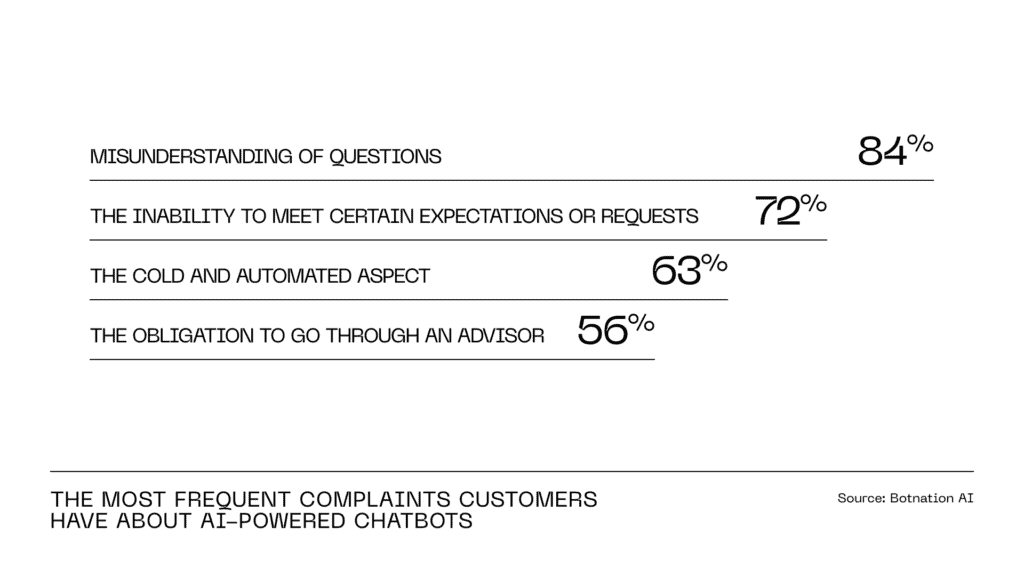

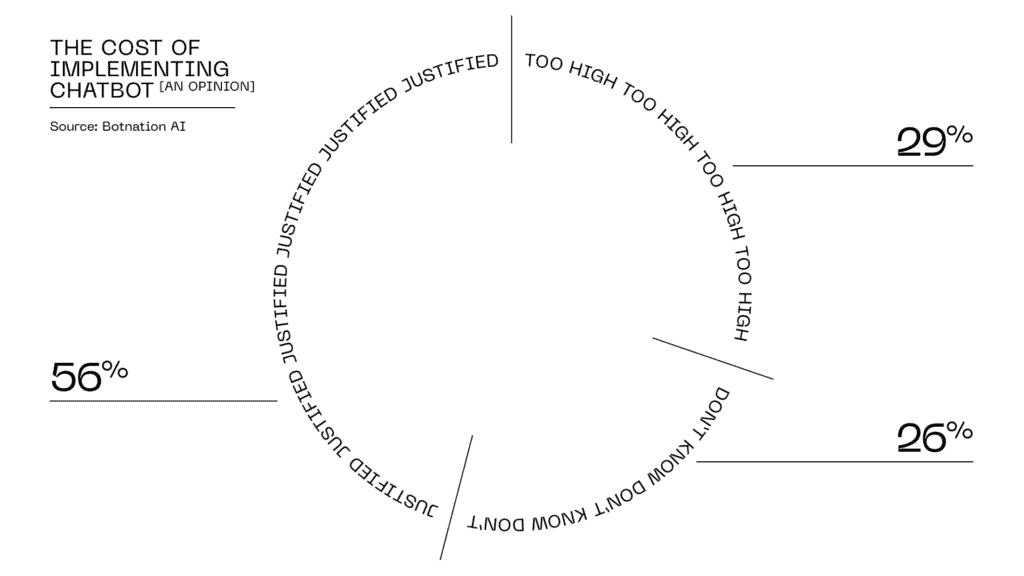

- Automating customer support conversations

AI cannot have an unbounded conversation. The viral ChatGPT skillfully navigates informal discussions, but where its knowledge falls short, it tends to fill in the gaps with made-up answers. It also cannot access personal information, making it useless for account-related questions.

An upset customer may not have the patience or knowledge to carefully craft their request to get a better answer. When they come to a chat, they have likely exhausted self-service means and still haven’t found the direct answer.

So, for now, it’s better to hold off on AI for customer support and rely on the tested combination of chatbots and human representatives.

- Eliminating bias from a process

Bias based on gender, race, age, sexuality, and other factors can be a problem in various business areas, including recruitment and employee training. However, since supervised learning is usually used to train AI systems, taking a person out of the loop won’t solve the problem.

On the contrary, letting a machine learning model pick up any available data without a person determining its ethical or business relevance can result in inaccurate and highly biased behavior.

So, the use of AI is possible in this case, but only to assist in classification and prediction. A supervisor must regularly verify the absence of bias and mistakes in the outcomes.
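As a rough illustration of what such a regular check might look like, the sketch below compares how often a model selects candidates from each group and flags the outcome for review when the rates diverge too much. The function names and sample data are hypothetical. The 0.8 ratio follows the commonly cited "four-fifths" rule of thumb and is an assumption here, not a legal or statistical guarantee of fairness.

```python
from collections import defaultdict


def selection_rates(records):
    """Map each group to the share of its members the model selected.

    records: iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def needs_review(records, min_ratio=0.8):
    """Flag outcomes where the lowest selection rate is below
    min_ratio times the highest one (assumed rule of thumb)."""
    rates = selection_rates(records)
    if not rates:
        return False  # nothing to compare
    highest = max(rates.values())
    if highest == 0:
        return False  # nobody was selected in any group
    return min(rates.values()) / highest < min_ratio


# Hypothetical screening results: (group, was_selected)
results = (
    [("A", True)] * 5 + [("A", False)] * 5
    + [("B", True)] * 2 + [("B", False)] * 8
)

print(selection_rates(results))
print("Needs human review:", needs_review(results))
```

A flag from a check like this is a prompt for a supervisor to investigate, not proof of bias in itself; the point is that a person stays responsible for interpreting the result.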

- Small data analysis

Most AI models work by analyzing data and generalizing from it to make predictions. If there’s not enough data, AI might not be able to generalize well to new and unseen information and may provide faulty results.

Therefore, before investing in AI, you must determine how to generate training data for a high-quality model. For the same reason, you should hold off on using AI for a new line of business where you don’t yet know the right responses to the problems.

Conclusion

The pace of technological advancement is rapid, and protective regulations are struggling to keep up. At the same time, fierce competition compels businesses to adopt new technology quickly, even if it’s not fully understood. This approach exposes them to risks that may only become apparent over time.

However, adopting responsible AI practices and making deliberate choices can help businesses mitigate these risks. By prioritizing ethics, companies can improve data safety, enhance their credibility, increase customer loyalty, and ultimately drive profits. In doing so, they also demonstrate a commitment to developing safe and beneficial products, which can help attract top digital talent.

At Syndicode, we stay updated with the latest artificial intelligence and machine learning developments. Our team has a wealth of experience creating AI/ML-based solutions for businesses across various industries, such as retail, fashion, and entertainment. We prioritize a responsible approach to integrating these technologies into software solutions, ensuring their safety and business efficiency.